From the Burrow

Post Holiday

I haven’t written for a while as I had a two week holiday and decided to take a break from most things except streaming. It was lovely and I got so much done that this can only really be a recap:

Performance

I made a profiler for CEPL that is plenty fast enough to use in real-time projects. I then used some macro ‘magic’ to instrument every function in CEPL, this let me target the things that were slowest in CEPL. I could happily fill a post on just this, but I will spare you that for now. Needless to say, CEPL is faster now.
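CEPL's profiler itself isn't shown in this post, so as a rough illustration of the 'instrument every function' idea, here is a minimal sketch in plain Common Lisp. The names `defun-profiled`, `*profile-table*` and `profile-report` are made up for this example and are not CEPL's API.

```lisp
;; Hypothetical sketch, NOT CEPL's actual profiler.
(defvar *profile-table* (make-hash-table :test #'eq)
  "Maps a function name to a cons of (call-count . total-internal-time-units).")

(defmacro defun-profiled (name args &body body)
  "Like DEFUN, but records call count and elapsed real time for NAME.
This sketch does not handle declarations at the start of BODY."
  (let ((start (gensym "START"))
        (entry (gensym "ENTRY")))
    `(defun ,name ,args
       (let ((,start (get-internal-real-time)))
         ;; unwind-protect so the timing is recorded even on a non-local exit
         (unwind-protect (progn ,@body)
           (let ((,entry (or (gethash ',name *profile-table*)
                             (setf (gethash ',name *profile-table*)
                                   (cons 0 0)))))
             (incf (car ,entry))
             (incf (cdr ,entry)
                   (- (get-internal-real-time) ,start))))))))

(defun profile-report ()
  "Return a list of (name calls total-time), slowest first."
  (let ((rows nil))
    (maphash (lambda (name entry)
               (push (list name (car entry) (cdr entry)) rows))
             *profile-table*)
    (sort rows #'> :key #'third)))
```

Swapping `defun` for something like `defun-profiled` across a codebase is the cheap version of the trick; a real-time profiler would want something far lighter than a hash-table lookup per call.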

I am contributing to a project called sketch by porting it to CEPL and I was really annoyed at how it was doing 90fps on one stress test and the CEPL branch was doing 20. After the performance work the CEPL branch was at 36fps but still sucking compared to the original branch. In a fit of confusion I commented out the rendering code and it only went up to 40fps, at which point I realized that, on master, I had been recording fps from a function that was called 3 times a frame :D so the 90fps was actually 30!

The result was pretty cool though as the embarrassment had probably pushed me to do more than I would have done otherwise.

GL Coverage

I have added CEPL support for:

- scissor & scissor arrays

- stencil buffers, textures & fbo bindings

- Color & Stencil write masks

- Multisample

and also fixed a pile of small bugs.

Streaming

Streaming is going well although removing the ‘fail privately’ from programming is something I’m still getting used to. (I LOVE programming for letting me fail so much without it all being on record)

This Wednesday I’m going to try and do a bit of procedural terrain erosion which should be cool!

FBX

FBX is a proprietary format for 3D scenes which is pretty much an industry standard. It is annoyingly proprietary and the binary format for the latest version is not known. There is a zero-cost (but not open source) library from Autodesk for working with fbx files but it’s a very C++ library so using it from the FFI of most languages is not an option.

Because this is a pita I’ve decided to make the simplest solution I can think of: use the proprietary library to dump the fbx file to a bunch of flat binary files with known layout. This will make it trivial to work with from any language which can read bytes from a file. I’m specifically avoiding a ‘smarter’ solution as there seem to be a bunch of projects out there in various states of neglect and so I am making something that, when Autodesk inevitably make a new version of the format, I can change without much thought.
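The actual dump layout isn't described here, so the following is only a sketch under an invented layout: a little-endian 32-bit count followed by that many little-endian 32-bit unsigned integers (think mesh indices). The function names are made up too. It is just meant to show how little code 'read bytes from a file' needs once the layout is known.

```lisp
;; Assumed layout (hypothetical): u32 count, then COUNT u32 values, little-endian.
(defun write-u32-le (value stream)
  "Write VALUE as 4 bytes, least significant byte first."
  (dotimes (i 4)
    (write-byte (ldb (byte 8 (* 8 i)) value) stream)))

(defun read-u32-le (stream)
  "Read 4 bytes, least significant first, and return the integer."
  (let ((value 0))
    (dotimes (i 4 value)
      (setf (ldb (byte 8 (* 8 i)) value) (read-byte stream)))))

(defun write-index-file (path indices)
  "Dump the sequence INDICES to PATH using the assumed layout."
  (with-open-file (out path :direction :output
                            :element-type '(unsigned-byte 8)
                            :if-exists :supersede)
    (write-u32-le (length indices) out)
    (map nil (lambda (i) (write-u32-le i out)) indices))
  path)

(defun read-index-file (path)
  "Read a file written by WRITE-INDEX-FILE into a vector of (unsigned-byte 32)."
  (with-open-file (in path :element-type '(unsigned-byte 8))
    (let* ((n (read-u32-le in))
           (result (make-array n :element-type '(unsigned-byte 32))))
      (dotimes (i n result)
        (setf (aref result i) (read-u32-le in))))))
```

The writer is only here so the example round-trips; in the plan above that side would be the C++ tool built on the proprietary library.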

The effect on my life

It’s odd to be in this place, 5 years of on/off work on CEPL and this thing is starting to look somewhat complete. There is still a bunch of bugfixes and newer GL features to add but the original vision is pretty much there. This seems to have freed up the mind to start turning inwards again and I’ve been in a bit of a slump for the last week. These slumps are something I have become a bit more aware of over the last year and so have become a little better at noticing. Still, as this is about struggles as well as successes and as the rest of this post is listing progress it seemed right to mention this.

Peace folks

input given

I’m late writing this week but there has been progress.

So after the PBR fail I knew I had to put some energy in different places for a week as I needed content for the stream. I knew dealing with input was coming soon and my input system was ugly, so that was an obvious candidate.

This is probably the 4th attempt I’ve made at making an event system. The originals were to varying degrees attempts at the callback/reactive input systems but they suffered a few key problems:

- Callbacks can be hard to reason about when there are enough of them

- Subscribing to something means that the provider now holds a reference to the subscribee, which means it can’t be freed if you forget to detach it (this is an issue as we experiment in the repl a lot so throwing things away should be easy)

- Input propagation can end up driving the per frame execution (more on this in a minute)

- In the versions where I made immutable events the allocation costs per frame could be very high[0]

The third one was particularly tricky, and it only became apparent to me when I had some specific use cases:

The first one was with mouse position events. The question is, which event was the last one for that frame. Let’s say I’m positioning something based on mouse position and that causes a bunch of work in other parts of the game. If I receive 10 move events in a frame I don’t want to do that work 10 times so there is going to be some kind of caching and I need to know when I have received the last event. This is a bit artificial and there are plenty of strategies around it but caching is something that ends up appearing a lot in the event propagating approach.

Next was when I had made a fairly strict entity component system and was trying it out in a little game. In this system you processed the components in batches, so you would process all of the location components and then all of the renderable components, etc. This posed a problem as events needed to be delivered to components but events were pumped at the beginning of the frame and components were processed later. I didn’t want to break the model so again I fell back to caching.

I needed something different and so the latest version, skitter, is much simpler.

First some design goals:

- The api is pull based[1]

- There must only be allocations happening when new input-sources (like mice, gamepads) are added or when new controls (like buttons, scroll wheels) are added to input sources.

- skitter shouldn’t need to know about any specific input api, it’s only dealing in its own state.

- It should be possible to both redefine controls live OR tell the system to make them static and optimize the crap out of them.

In the system you now define a control like this:

;; name ↓↓ lisp data type ↓↓ ↓↓ initial value

(define-control wheel (:static t) single-float 0.0)

This is like defining a type in the input system, you give it a name, tell it the kind of data it holds and its initial value.

The :static t bit means this will be statically typed and will not be able to be changed live.

This one is slightly different:

(define-control relative2 (:static t) vec2 (v! 0 0) :decays t)

Here we have told it to ‘decay’. This means that each frame the value returns to the initial value. This is because you don’t get an event from (all/any?) systems to tell you that something has stopped happening.

We can now make an input source

(define-input-source mouse ()

(pos position2)

(move relative2)

(wheel wheel2)

(button boolean-state *))

Here a mouse has an absolute position, a relative movement, a possibly 2d scroll wheel and buttons (we don’t know how many so we say *)

We can then call (mouse-pos mouse-obj) to get the position.

You can then write code that takes the events from your systems (sdl2, qt, glfw, etc) and puts them into the source by using functions like (skitter:set-mouse-pos mouse-obj timestamp new-position)

Bam cross system event management. It’s dirt simple to use and the hairy logic is all handled by a few macros internally.
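Skitter's internals aren't shown in this post, so here is a plain Common Lisp sketch of the pull idea with decay. Everything in it (`control`, `set-control`, `decay-controls`) is an invented stand-in rather than skitter's API: the glue code overwrites a slot whenever an event arrives, and the game code reads the slot when it wants to.

```lisp
;; Hypothetical stand-in for a skitter-style control, not the real thing.
(defstruct control
  (value 0.0)      ; latest value written by the glue code
  (initial 0.0)    ; value a decaying control falls back to
  (last-set 0)     ; timestamp of the most recent write
  (decays nil))    ; when true, reset to INITIAL each frame

(defun set-control (control timestamp new-value)
  "Called by the sdl2/glfw/etc glue code. Later events simply overwrite
earlier ones, so nothing downstream runs once per event."
  (setf (control-value control) new-value
        (control-last-set control) timestamp)
  control)

(defun decay-controls (controls)
  "Call once per frame before pumping events: decaying controls go back
to their initial value, since no 'it stopped' event may ever arrive."
  (dolist (c controls)
    (when (control-decays c)
      (setf (control-value c) (control-initial c)))))
```

With this shape, ten mouse-move events in a frame cost ten slot writes, and whoever calls `(control-value pos)` later in the frame sees only the last one, which is the caching the older designs kept needing.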

But we have missed one thing that callbacks were good for, events over time. Reactive approaches have this awesome way of composing streams of events into new events and, whilst I can’t have that, I do want something. The caching in our system poses a problem as we now only have the latest event. So rather than bringing the events to the processing code we will bring the processing to the event (or something :D).

What we do is add a logical-control to our input-source. Maybe we want double-click for our mouse, we will make a kind of control with its own internal state that sets itself to true when it has seen two events within a certain timeframe:

(define-logical-control (double-click :type boolean :decays t)

((last-press 0 integer))

((button boolean-state)

(if (< (- timestamp last-press) 10)

(progn

(setf last-press 0)

(fire t))

(setf last-press timestamp))))

This is made up & untested code, however how it should work is that we have a new kind of control called double-click, its state is boolean. It has a private field called last-press which holds the timestamp of the last press. It depends on the boolean-state of another control and internally we give this thing the name ‘button’. The if is going to get evaluated every time one of the dependent controls (button in this case) is updated. fire is the function you call in a logical-control to update your own state.
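Since define-logical-control is still a WIP, here is the same little state machine as a plain Common Lisp closure that can actually be run. `make-double-click-detector` is a made-up name, and I've made two assumptions that differ from the snippet above: 'no previous press' is stored as nil rather than 0 (so a first press at a tiny timestamp isn't mistaken for a double click) and button releases are ignored.

```lisp
;; Illustrative sketch only; skitter's logical controls may end up different.
(defun make-double-click-detector (&optional (window 10))
  "Return a function of (timestamp pressed-p) that returns T when a press
arrives less than WINDOW time units after the previous press."
  (let ((last-press nil))
    (lambda (timestamp pressed-p)
      (cond ((not pressed-p) nil)                 ; ignore releases
            ((and last-press
                  (< (- timestamp last-press) window))
             (setf last-press nil)                ; consume both presses
             t)                                   ; this is the 'fire'
            (t (setf last-press timestamp)        ; remember this press
               nil)))))
```

An attack combo would be the same shape with more states: the closure remembers how far through the sequence you are and returns true when the last input lands in time.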

What is nice with this is that you can then define attack combos as little state-machines, put them in logical controls and attach them to the controller object. You can now see the state of Ryu’s Shoryuken move in the same way you would check if a button is being held down.

The logical-control is still very much a WIP but I’m happy with the direction it is going.

That’s all for input for now, I have been doing other stuff this week like making a tablet app which lets you use sliders and pads to send data to lisp [2] and of course more streaming[3] but it’s time for me to go do other stuff.

This weekend I am going to have a go at making a little RPG engine with all this stuff. Should be fun!

[0] you could of course cache event objects, but then you need n of each type of event object (because we may dispatch on type) and we can’t make them truly immutable.

[1] I made a concession that you can have callbacks as they are used internally and it hopefully means people won’t try and hack their own in on top of the system.

[2]

[3]

Swing and a miss

So this last weekend I worked on PBR again as there was a wonderful new tutorial out. The good news is that it cleared up a lot of points of confusion for me. The bad news is my version is still incorrect :(

I have been through every damn line of glsl to make sure that the PBR implementation itself matched the tutorial, which leaves the major possibility that it was something else all along; that some part of the deferred pass is incorrect.

It would explain a lot but also be crazy annoying.

The other possibility of course is that my implementation of PBR doesn’t match the tutorial but I’m having an increasingly hard time believing that.

Other than that, streaming is going well and I am doing another one tonight, being forced to learn something well enough to explain it is good stuff.

Anyhoo, that’s this week, hopefully next time it will be better news :D

Ughhh

I’ve been slogging through some really boring stuff these last few days. Boring but necessary.

People have been asking for a stream on using Varjo and so that’s what I’m doing tomorrow. However I’ve been more and more bothered by the fact I would do this stream and, within a month, it would be obsolete as I knew I wanted to change how things were structured. This really meant I had to bite the bullet and get it done.

It’s done now but these changes affected every project I work on so naturally testing took some time. The good news is that all of these changes are in the release branches for the various projects and so will ship with the next quicklisp cycle.

Next up was documentation for CEPL. I’ve been shipping a bunch of new goodness recently so plenty of things either needed documenting or tweaking. Also the documentation generator I use now supports markdown so I went through everything reformatting and editing things. That was terrifically boring. I finished that around 4am on Sunday. You can find the result here

Then I was informed by the lovely quicklisp folks that a couple of my libraries weren’t building. Damn. I hadn’t tested those in the rush of everything else. That took an hour to fix but it’s good now.

I also wrote the documentation for my cl-soil wrapper library (MORE BORING!) which can be found here

Making good product is hard man, I had a 3 day weekend and feel tired and a bit grumpy (oh poor me <tiny violin> :p) but at least tomorrow’s stream will make sense.

…

Except.

I may have worked out how to have &rest arguments (varargs in other languages) in Vari so I may try and hack that in tonight, which means more testing :D I’m a glutton for punishment.

There were also some other fixes this week but not interesting enough to be worth writing about now.

Until next week, Ciao.

2 days late again

But there is news at least!

Varjo

I changed varjo’s if form so that if certain conditions are right it will emit a ternary operator instead of a full if statement in the glsl

(glsl-code

(translate

(make-stage :vertex '((a :int)) nil '(:450)

'((let ((x (if (< a 10)

a

(- a))))

(v! 1 2 3 4))))))

"// vertex-stage

#version 450

layout(location = 0) in int A;

void main()

{

int X = (A < 10) ? A : (- A);

gl_Position = vec4(float(1),float(2),float(3),float(4));

return;

}

"

Aside from this I fixed a few bugs and made little tweaks such as: if you use -> in a name in lisp it becomes _to_ in glsl. A tiny touch but it makes the generated code nicer to read.
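To make the renaming concrete, here is a small plain Common Lisp sketch of that kind of name mangling. It is not Varjo's code: `glsl-name` and `replace-all` are names invented here, and the casing is an assumption based on the upper-cased identifiers in the generated GLSL above, so the real output may well differ in details.

```lisp
;; Illustrative only; Varjo's real name translation is not shown in the post.
(defun replace-all (string old new)
  "Return STRING with every occurrence of OLD replaced by NEW.
OLD must be a non-empty string."
  (with-output-to-string (out)
    (loop with start = 0
          for pos = (search old string :start2 start)
          do (write-string string out :start start :end pos)
          while pos
          do (write-string new out)
             (setf start (+ pos (length old))))))

(defun glsl-name (lisp-name)
  "Turn a lisp name into a GLSL-friendly identifier:
'->' becomes '_to_' and any remaining '-' becomes '_'."
  (let* ((name (string-upcase (string lisp-name)))
         (name (replace-all name "->" "_to_")))
    ;; '->' is handled first so its '-' is not turned into '_' on its own
    (substitute #\_ #\- name)))
```

So a function called something like rgb->hsv would read as a conversion in the generated code instead of ending in a pile of underscores.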

Nineveh

This last week I added color space conversion gpu-functions and also functions for generating mesh data for common primitives (sphere, box, cone, etc)

Streaming

Here are my last 2 streams. I’m getting more used to this each time and it’s lovely seeing a few regulars in the chat, this week was especially lovely as there were lots of questions. Not much else to say on this other than I’m gonna try and keep up the ‘stream every Wednesday’ goal.

Ciao

Streaming!

This’ll be a quick post, both because I’m a day late posting this and also because I’m streaming in an hour.

Staying on that subject, I streamed a thing! I’m pretty stoked as it seemed to go well (despite nerves) and the response has been great. You can find the recording below.

Other than that I’ve been doing some research for future streams, fixing bugs in Varjo and adding some features to various libraries. I have a day off tomorrow so I’m thinking of hammering on with color conversion functions and also with mesh generation.

I’ve also been putting some time into porting sketch to CEPL but I’m still struggling with performance. The original had minimal state changes (1 program, 1 vao) and used buffer streaming to push tonnes of verts every frame. However it makes >4000 draw calls a frame. This use case runs into some rather slow parts of CEPL so I’ll need to do work there. More on this next week.

Right, time to go.

Ciao

It's been a good week

Some other projects have been accepted into the lisp package manager.

I put out 2 new videos:

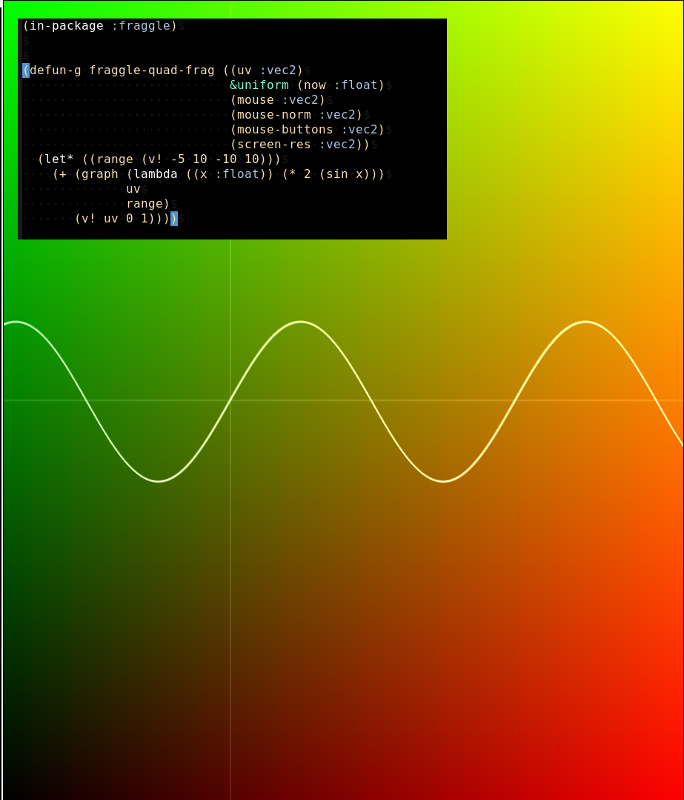

I have added graphing functions to Nineveh, my ‘standard library’ of gpu functions for CEPL.

And I’ll be streaming here this Wednesday 18:00 UTC. The plan is to learn some graphics programming stuff by using lisp to take things apart on the stream. This week I’m going to start on the road to understanding noise functions by taking apart some gpu hashing functions. I’m a bit nervous but if it goes well I’m gonna try doing these weekly.

That’s all for now. Seeya.

The stocking of Nineveh

What a week. I’m a bit exhausted right now as I’ve been pushing a bit, but the results are fairly pleasing to me.

GLSL Quality

First off I did a bunch of work in making the generated GLSL a bit nicer to read. The most obvious issue was that, when I returned multiple values, I would create a bunch of temporary variables. The code was all technically correct but it didn’t read well. So now code like this:

(defun-g foo ((x :float))

(let ((sq (* x x)))

(values (v2! sq)

(v3! (* sq x)))))

can compile to glsl like this

vec2 FOO(float X, out vec3 return_1)

{

float SQ = (X * X);

vec2 g_G1075 = vec2(SQ);

return_1 = vec3((SQ * X));

return g_G1075;

}

Which is fairly readable. 1 temporary variable is still used, but that is to maintain the order of evaluation that was expected.

return is one of the areas of the compiler with the most magic as we generate different code based on the context the code is being compiled in. It could be a return from a regular function (like above), or it could be from a stage in which case we need to turn it into out interface block assignments etc. This meant it took a bunch of testing to get it correct.

Compiler cleanup

For a while I’ve been trying to clean up the code around how multiple return values are handled. I had a few false starts here (which ended in force-pushing away a day’s worth of work) but it’s done now. There are still plenty of scars in the codebase but the healing can at least begin :p

Bugs in release

I then found a bug & a regression in the code I had prepped for the next release (into the common lisp package manager). As the releases are not made on a fixed cadence I wasn’t sure how long I had so I dived into that. It turned out to be one of those cases where even good type checking wouldn’t have helped. I had removed some code that looked redundant but was actually resolving the aliased name of a type.

Compile in every direction

With that done I sat down for some well-earned farting around. Last week I had knocked up a simple shadertoy like thing[0] so I went back to working through that.

I got to this part where it was recommending going and implementing various curve functions from folks like IQ & Golan Levin. So dutifully I started.. but I was getting this nagging feeling that I should have more noise functions. So after porting some functions from the chaps above I found [this article](https://briansharpe.wordpress.com/2011/10/01/gpu-texture-free-noise/) on texture-free noise.

Needless to say I got hooked on that, and then finding out he had a whole library I decided that I needed it, all of it, in lisp :)

So that was the weekend. I polished up my naive GLSL -> Lisp translator and got cracking porting. Having this much code pouring in was a really good test-case for the compiler and so I got to clean up a few more bugs in the process.

One interesting addition to the compiler was to allow floating point numbers as strings in the lisp code. It looks like this: (+ (v4! 1.5) (v4! "1.4142135623730950488016887242097")). The reason for this is that some floating point numbers used in shaders are used for their exact bit-pattern rather than strictly for the value itself. This optional string syntax avoids the risk of the host lisp using a different floating point representation and messing up the value in some way.

The first pass of the import is done. Next is cleanup and documentation but that is less critical. My standard library Nineveh is looking slightly better stocked now :)

Validation

One thing that felt great was noticing that, in the GLSL noise library, there were cases where it would be nice to switch out the hashing function used in a noise function. In the GLSL it was done with comments but I realized this was a PERFECT place to use first class functions. So here it is:

(defun-g ctoy-quad-frag ((uv :vec2)

&uniform (now :float)

(mouse :vec2)

(mouse-norm :vec2)

(mouse-buttons :vec2)

(screen-res :vec2))

;; here is the hash function we pass in ↓↓↓↓↓↓

(v3! (+ 0.4 (* (perlin-noise #'sgim-qpp-hash-2-per-corner

(* uv 5))

0.5))))

In that code we call perlin-noise passing in the hashing function we would like it to use. And here is the generated GLSL.

// vertex-stage

#version 450

uniform float NOW;

uniform vec2 MOUSE;

uniform vec2 MOUSE_NORM;

uniform vec2 MOUSE_BUTTONS;

uniform vec2 SCREEN_RES;

vec3 CTOY_QUAD_FRAG(vec2 UV);

float PERLIN_NOISE(vec2 P);

vec2 PERLIN_QUINTIC(vec2 X2);

vec4 SGIM_QPP_HASH_2_PER_CORNER(vec2 GRID_CELL, out vec4 return_1);

vec4 QPP_RESOLVE(vec4 X1);

vec4 QPP_PERMUTE(vec4 X0);

vec4 QPP_COORD_PREPARE(vec4 X);

vec4 QPP_COORD_PREPARE(vec4 X)

{

return (X - (floor((X * (1.0f / 289.0f))) * 289.0f));

}

vec4 QPP_PERMUTE(vec4 X0)

{

return (fract((X0 * (((34.0f / 289.0f) * X0) + vec4((1.0f / 289.0f))))) * 289.0f);

}

vec4 QPP_RESOLVE(vec4 X1)

{

return fract((X1 * (7.0f / 288.0f)));

}

vec4 SGIM_QPP_HASH_2_PER_CORNER(vec2 GRID_CELL, out vec4 return_1)

{

vec4 HASH_0;

vec4 HASH_1;

vec4 HASH_COORD = QPP_COORD_PREPARE(vec4(GRID_CELL.xy,(GRID_CELL.xy + vec2(1.0f))));

HASH_0 = QPP_PERMUTE((QPP_PERMUTE(HASH_COORD.xzxz) + HASH_COORD.yyww));

HASH_1 = QPP_RESOLVE(QPP_PERMUTE(HASH_0));

HASH_0 = QPP_RESOLVE(HASH_0);

vec4 g_G5388 = HASH_0;

return_1 = HASH_1;

return g_G5388;

}

vec2 PERLIN_QUINTIC(vec2 X2)

{

return (X2 * (X2 * (X2 * ((X2 * ((X2 * 6.0f) - vec2(15.0f))) + vec2(10.0f)))));

}

float PERLIN_NOISE(vec2 P)

{

vec2 PI = floor(P);

vec4 PF_PFMIN1 = (P.xyxy - vec4(PI,(PI + vec2(1.0f))));

vec4 MVB_0;

vec4 MVB_1;

//

// Here is that hashing function in the GLSL

// ↓↓↓↓↓↓↓↓↓↓↓↓↓↓↓↓↓↓↓↓

MVB_0 = SGIM_QPP_HASH_2_PER_CORNER(PI,MVB_1);

vec4 GRAD_X = (MVB_0 - vec4(0.49999));

vec4 GRAD_Y = (MVB_1 - vec4(0.49999));

vec4 GRAD_RESULTS = (inversesqrt(((GRAD_X * GRAD_X) + (GRAD_Y * GRAD_Y))) * ((GRAD_X * PF_PFMIN1.xzxz) + (GRAD_Y * PF_PFMIN1.yyww)));

GRAD_RESULTS *= vec4(1.4142135623730950488016887242097);

vec2 BLEND = PERLIN_QUINTIC(PF_PFMIN1.xy);

vec4 BLEND2 = vec4(BLEND,(vec2(1.0f) - BLEND));

return dot(GRAD_RESULTS,(BLEND2.zxzx * BLEND2.wwyy));

}

vec3 CTOY_QUAD_FRAG(vec2 UV)

{

vec4(MOUSE_NORM.x,MOUSE_BUTTONS.x,MOUSE_NORM.y,float(1));

return vec3((0.4f + (PERLIN_NOISE((UV * float(5))) * 0.5f)));

}

void main()

{

CTOY_QUAD_FRAG(<dummy v-vec2>);

gl_Position = vec4(float(1),float(2),float(3),float(4));

return;

}

I’m stoked at how natural the function call looks in the resulting code. Also it’s extra fun that the function passed in has 2 return values and everything just worked :)

For completeness here are a couple of the other functions involved

(defun-g perlin-noise ((hash-func (function (:vec2) (:vec4 :vec4)))

(p :vec2))

;; looks much better than revised noise in 2D, and with an efficient hash

;; function runs at about the same speed.

;;

;; requires 2 random numbers per point.

(let* ((pi (floor p))

(pf-pfmin1 (- (s~ p :xyxy) (v! pi (+ pi (v2! 1.0))))))

(multiple-value-bind (hash-x hash-y) (funcall hash-func pi)

(let* ((grad-x (- hash-x (v4! "0.49999")))

(grad-y (- hash-y (v4! "0.49999")))

(grad-results

(* (inversesqrt (+ (* grad-x grad-x) (* grad-y grad-y)))

(+ (* grad-x (s~ pf-pfmin1 :xzxz))

(* grad-y (s~ pf-pfmin1 :yyww))))))

(multf grad-results (v4! "1.4142135623730950488016887242097"))

(let* ((blend (perlin-quintic (s~ pf-pfmin1 :xy)))

(blend2 (v! blend (- (v2! 1.0) blend))))

(dot grad-results (* (s~ blend2 :zxzx) (s~ blend2 :wwyy))))))))

(defun-g sgim-qpp-hash-2-per-corner ((grid-cell :vec2))

(let (((hash-0 :vec4)) ((hash-1 :vec4)))

(let* ((hash-coord

(qpp-coord-prepare

(v! (s~ grid-cell :xy) (+ (s~ grid-cell :xy) (v2! 1.0))))))

(setf hash-0

(qpp-permute

(+ (qpp-permute (s~ hash-coord :xzxz)) (s~ hash-coord :yyww))))

(setf hash-1 (qpp-resolve (qpp-permute hash-0)))

(setf hash-0 (qpp-resolve hash-0)))

(values hash-0 hash-1)))

All credit of implementation goes to Brian Sharpe for this excellent noise library

Peace

OK I’ll stop now and spare you more details. I’m just so happy to see this behaving.

Have a great week.

Ciao

[0] https://github.com/cbaggers/fraggle/blob/master/main.lisp

Trials and Tessellations

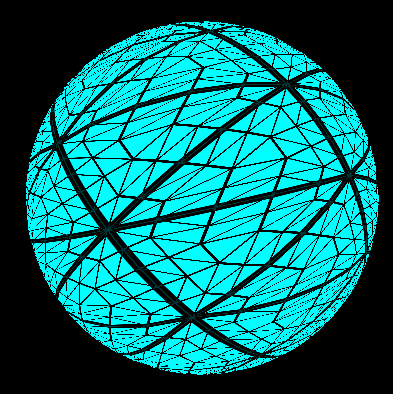

Tessellation works! I was able to replicate the fantastic tutorial from The Little Grasshopper and finally get a test of a pipeline with all 5 programmable stages.

This took a little longer than expected as my GPU complained about the following shader

Error compiling tess-control-shader:

0(6) : error C5227: Storage block FROM_VERTEX_STAGE requires an instance for this profile

0(13) : error C5227: Storage block FROM_TESSELLATION_CONTROL_STAGE requires an instance for this profile

#version 450

in _FROM_VERTEX_STAGE_

{

in vec3[3] _VERTEX_STAGE_OUT_1;

};

layout (vertices = 3) out;

out _FROM_TESSELLATION_CONTROL_STAGE_

{

out vec3[] _TESSELLATION_CONTROL_STAGE_OUT_0;

};

void main() {

vec3 g_G1327 = _VERTEX_STAGE_OUT_1[gl_InvocationID];

vec3 g_GEXPR01328 = g_G1327;

_TESSELLATION_CONTROL_STAGE_OUT_0[gl_InvocationID] = g_GEXPR01328;

float TESS_LEVEL_INNER = 5.0f;

float TESS_LEVEL_OUTER = 5.0f;

if ((gl_InvocationID == 0))

{

gl_TessLevelInner[0] = TESS_LEVEL_INNER;

gl_TessLevelOuter[0] = TESS_LEVEL_OUTER;

gl_TessLevelOuter[1] = TESS_LEVEL_OUTER;

gl_TessLevelOuter[2] = TESS_LEVEL_OUTER;

}

}

As far as I can tell, the above code is valid according to the spec, but my driver wasn’t having any of it[0]. This meant I had to do some moderate code re-jiggling. You can’t just add an instance name to the blocks as then you get:

Error Linking Program 0(13) : error C7544: OpenGL requires tessellation control outputs to be arrays

Grrr. This makes total sense according to the spec though, so grr goes back to error C5227. FUCK YOU C5227.

Anyway that got fixed and this happened

With that done I made sure the same worked using CEPL’s inline glsl stages and tested a bunch.

This left me at an odd point. CEPL has a lot fewer gaps in the API than it used to[1], it feels kind of ready for me to use.

So I sat down with The Book of Shaders and got started. I simply can’t give that project enough praise, it’s wonderfully written and remains interesting whilst also pushing you just enough to really digest the details of what they want you to learn. I made a little shadertoy substitute[2] and started doing the exercises. I have to say I’m pretty proud of how fun it all felt, it’s been a lot of work to get something that is lispy but doesn’t feel hampered by being the non-native language, so those hours of play were very vindicating.

Within a very short time The Book of Shaders is getting you to amass a collection of helper functions for your shaders. I already have a (very wip) project called Nineveh for this purpose, so it’s time to start working on that again! I want a kind of ‘standard library’ for CEPL, somewhere you can find a pile of implementations of common functions (e.g. noise, color conversion, etc) to either use or at least use as a starting point.

There are often cases where libraries like this fall short as you want a slight variation on a shader. Say for example you like their FBM function but want one more octave of noise. I’m hoping macros can help a little here as we can provide something that generates the variant you need.

This is likely naive but it’ll be fun to try.
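As a rough idea of what 'a macro that generates the variant you need' could look like, here is a CPU-side sketch in plain Common Lisp. None of this is Nineveh's API: `define-fbm` and `toy-noise` are invented for the example, and a real version would emit a gpu function rather than a regular one.

```lisp
;; Hypothetical sketch: generate an FBM function with a fixed octave count.
(defun toy-noise (x)
  "Stand-in for a real noise function, just so the example runs."
  (sin x))

(defmacro define-fbm (name noise-fn
                      &key (octaves 4) (lacunarity 2.0) (gain 0.5))
  "Define NAME as a function of X that sums OCTAVES calls to NOISE-FN.
OCTAVES, LACUNARITY and GAIN must be literal numbers, as the loop is
unrolled at macroexpansion time."
  `(defun ,name (x)
     (+ ,@(loop for i below octaves
                collect `(* ,(expt gain i)
                            (,noise-fn (* x ,(expt lacunarity i))))))))

;; The library's default, and the 'one more octave' variant from the text:
(define-fbm fbm-4 toy-noise :octaves 4)
(define-fbm fbm-5 toy-noise :octaves 5)
```

The appeal is that the person who wants five octaves writes one line instead of copy-pasting the shader and editing it.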

Right, that’s my lot for now. Seeya!

[0] I was meant to be using the compatibility profile too so I’m not sure what was up

[1] See last week’s post for what remains

[2] There’s about 50 lines not shown here which handles input & screen events.

(in-package :ctoy)

(defun-g ctoy-quad-vert ((pos :vec2))

(values (v! pos 0 1)

(* (+ pos (v2! 1f0)) 0.5)))

(defun-g ctoy-quad-frag ((uv :vec2)

&uniform (now :float)

(mouse :vec2) (mouse-norm :vec2) (mouse-buttons :vec2)

(screen-res :vec2))

(v! (/ (s~ gl-frag-coord :xy) screen-res) 0 1))

(def-g-> draw-ctoy ()

:vertex (ctoy-quad-vert :vec2)

:fragment (ctoy-quad-frag :vec2))

(defun step-ctoy ()

(as-frame

(map-g #'draw-ctoy (get-quad-stream-v2)

:now (* (now) 0.001)

:mouse (get-mouse)

:mouse-norm (get-mouse-norm)

:mouse-buttons (get-mouse-buttons)

:screen-res (viewport-resolution (current-viewport)))))

(def-simple-main-loop ctoy

(step-ctoy))

Checking in

So this week I don’t have much technical to write about, the main things I’ve been doing are

- Bug-fixing/refactoring

- Getting everything into master and then finally

- Getting the next release ready.

This month’s release will bring a huge slew of changes into the compiler, starting with all the geometry shader work I’ve been doing. I’ve also landed the new backwards compatible host API for CEPL which brings multiple window support among other things.

In the next 2 weeks I want to have finished my latest cleanup of Varjo and added support for tessellation stages.

After this I’ve reached an interesting place with regards to CEPL as I think the major features will be done. I’ve still got a few things I want support for before I call it v 1.0:

- Scissor

- Stenciling

- Instanced Arrays

- Multiple Contexts

And each of those seem like fairly contained pieces of work. Even though there are things in GL I haven’t considered supporting yet (like transform feedback) I don’t think I’ve ever felt this close to CEPL being ‘ready’. It’s an odd feeling.

After that I’d like to focus on stability, performance and on Nineveh which is going to be my ‘standard library’ of GPU functions and graphics related stuff.

Until next week,

Peace