From the Burrow

Getting CEPL into Quicklisp

Sorry I haven’t been posting for ages. There has been plenty of progress but I have been lax about writing it up here.

The biggest news up front is that CEPL is now in Quicklisp. This has been a long time coming, but it’s now much easier to get CEPL set up and running.

Doing this meant cleaning up all the cruft and experiments that were in the CEPL repo. The result is that, like GL, CEPL has a host that provides the context and window; the event system is its own project, as is the camera; and much else is cleaned and trimmed.

CEPL can now potentially have more hosts than just SDL2, though today it remains the only one. I really want to get the GLOP host written so we can have one less C dependency on Windows and Linux.

More news coming :)

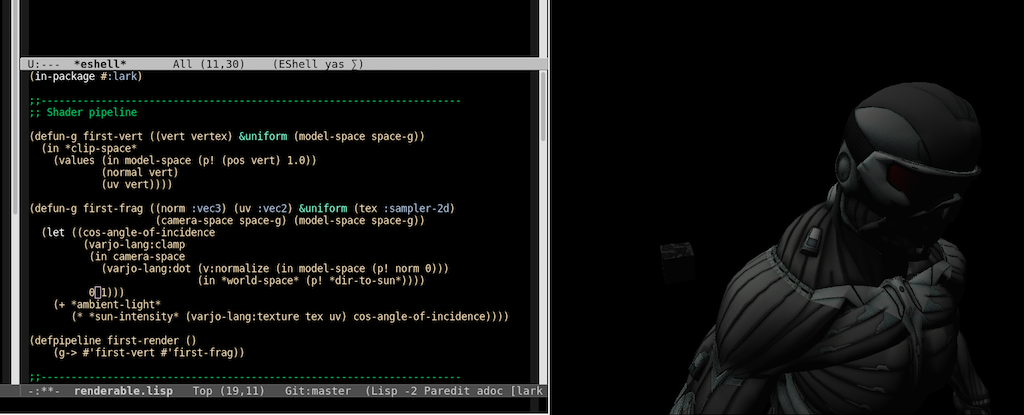

OH hell yes!

The code on the left is the render pipeline for the image on the right. All of it can be edited live. I love this.

Also using the new spaces feature. More details coming soon!

p.s. It’s only super basic shading but the fact that the features are working means that I can focus on the fun stuff!

Hammering out the api

Where we left off

As I said the other day I have #’get-transform working, albeit inefficiently, and after that it was time to think about how these features should be used. Of course we have in blocks, but there were questions (that I mentioned last time) like:

- What happens if you try to pass a type like position or space (that don’t exist at runtime) from one shader stage to another?

- Can these kinds of types be used in gpu-structs?

- Should stages have an implicit space?

- If you pass up a position as a uniform, where does its space come from?

Questions like this have been taking a lot of time to sort out. It’s one of those funny things: when the language doesn’t limit you and your goal is ‘the best’ programming experience, any of hundreds of crazy solutions are possible, so choosing becomes harder.

Maybe this

One possibility was to make space a first class concept in cepl, so all gpu-arrays and such would have a space. That would mean that I could have the terrain vertex data in a gpu-array in world space. e.g.

(make-gpu-array terrain-data :type 'terrain-vert :space world-space)

and then the vertex shader could technically look like this

    (defun-g terrain-vertex-shader ((vert terrain-vert))
      (values (pos vert)
              (normal vert)
              (uv vert)))

Now! because the vertex shader implicitly takes place in clip space and cepl knows that the vert data is in world-space then it could add the world->clip space transforms for you.

This sounds kinda awesome, and with cepl I can totally make this work… but I decided against it. Why? Well because it bakes a certain use-case into a data-structure, here is a simple example where it breaks down.

I have the data for a bush and I will instance it a thousand times across a landscape. Each instance will have a different model->world transform. At this point, what is the space associated with the bush data doing? It’s not needed, yet it’s ‘attached’ to the data.

After an hour of wrangling, it seemed like this idea just added complexity so, for now at least, it’s out.

A few more ideas ended up in a similar place. Namely:

cepl, in some places, is reaching the limit of how much it can help without becoming an engine.

this is an interesting feeling in itself.

So after all that I decided the healthiest thing was to leave that for a bit and focus on the cpu side of the space feature.

Goblins of the brain

One thing that was odd was that I had this nagging feeling that I had something wrong with my concept of how spaces should be organized so I’ve been staring at books & tutorials again trying to find the something I had missed. Quite suddenly the following popped into my head:

I have assumed that all spaces have one parent. I have conflated hierarchical and non-hierarchical relationships

And now I need to try and explain what I mean by that :)

The whole point of making a space graph rather than a scene graph was to avoid the idea that game entities would be part of the graph, which I believe is a massive code-smell.

I want only spaces to be in the graph, so far so good.

I define my spaces with some transforms relative to a parent space. Seems OK. But is it? I realized I ran into some issues when looking at the eye-space to clip-space relationship.

There isn’t a clear parent. Changing the position of the eye (game camera) doesn’t transform clip-space in the way moving a table affects the objects on the table. Also defining eye-space as a child of clip space feels weird.

What I had was what I’m calling a non-hierarchical relationship. There is a valid transform between them but it isn’t defined in terms of a parent-child relationship. Examples of hierarchical relationships are things like arms and hands, where moving the arm moves the hand.

So what I want now is:

- lots of space-trees, which are hierarchical. They are defined in a parent-child way and are great for character limbs, foliage branches etc.

- non-hierarchical relationships between the root nodes of those space-trees.
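To make that split a bit more concrete, here is a minimal sketch of the shape I have in mind. Every name in it (space-node, space-root, relate-roots, root-relation) is made up for illustration and is not CEPL’s API, and the transforms are just placeholder keywords rather than real matrices.

```lisp
;; Hypothetical sketch, not CEPL's API.
;; Hierarchical part: each space has at most one parent (NIL means root).
(defstruct (space-node (:constructor make-space-node (name &optional parent)))
  name
  parent)

(defun space-root (node)
  "Follow parent links until we hit the root of NODE's space-tree."
  (let ((parent (space-node-parent node)))
    (if parent
        (space-root parent)
        node)))

;; Non-hierarchical part: a table of relationships between ROOT spaces only.
(defvar *root-relations* (make-hash-table :test #'equal))

(defun relate-roots (from to transform)
  "Record a transform between two root spaces. Neither is the other's parent."
  (assert (null (space-node-parent from)))
  (assert (null (space-node-parent to)))
  (setf (gethash (cons (space-node-name from) (space-node-name to))
                 *root-relations*)
        transform))

(defun root-relation (from to)
  "Look up the transform recorded from root FROM to root TO, or NIL."
  (values (gethash (cons (space-node-name from) (space-node-name to))
                   *root-relations*)))

;; One space-tree for a character limb...
(defparameter *world* (make-space-node 'world))
(defparameter *arm*   (make-space-node 'arm *world*))
(defparameter *hand*  (make-space-node 'hand *arm*))

;; ...and two roots joined by a relationship instead of parenthood.
(defparameter *eye*  (make-space-node 'eye))
(defparameter *clip* (make-space-node 'clip))
(relate-roots *eye* *clip* :projection) ; placeholder for a real matrix
```

Moving the arm affects the hand because the hand hangs off it in the tree. Eye and clip space never appear in each other’s parent chain, yet there is still a transform between them.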

So is this special? Nope :) It’s more my journey than something totally new. The first part of the definition from Wikipedia says

A tree node (in the overall tree structure of the scene graph) may have many children but often only a single parent

but later clarifies:

It also happens that in some scene graphs, a node can have a relation to any node including itself, or at least an extension that refers to another node.

OpenSceneGraph has multiple parents…which to me sounds like a bit of a terminology mistake, what does a spatial hierarchy with many parents mean?

Also, having graph pointers sounds scary. They would have to be quite strict in what they can do, and if they have strict behavior then surely there can be a better term.

Some other engines avoid the issue somewhat by keeping the scene graph to the hierarchical stuff and letting the subject of clip-space and such be a concern of shaders. My brief look at Unity seems to suggest this approach.

Whilst that last one avoids confusion at first it feels like you kind of only defer the issue to another part of the code-base. I would hope we could come up with some analogy in code that extends across these domains. This may not be as easy at first but should be simpler in the end.

And Now

Since that realization I’ve been sketching out my plan for how to make this. It isn’t a massive overhaul, which is nice, and there seem to be some obvious places to start optimizing later on.

For now I’m just going to get something slow working and play with it to see what happens, but I have to say I feel like a mental blockage has been freed and I’m pretty optimistic about what comes next.

Space progress

Having got something working on the shader generation side I have gone back to the cpu side of the story.

When the shader compiler sees a position changing space like this:

    (in s
      (in w
        (p! (v! 1 1 1 1)))
      0)

it turns it into glsl something like this:

W-TO-S * vec4(1,1,1,1)

Where W-TO-S is a matrix4 which is to be uploaded. On the cpu side cepl adds something like this

    (let ((w-to-s (spaces:get-transform w s)))
      (cepl-gl::uniform-matrix-4ft w-to-s-uniform-location w-to-s))

Up until now the get-transform function has just returned the identity matrix, so it was time to flesh that out.

I now have an implementation that looks like it works but is incredibly inefficient. It will do the job for now, and as cepl is already uploading the result, the feature should be working now.
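For a feel of what a slow-but-working lookup can look like, here is one naive way to do it. This is a sketch and not the actual implementation. It assumes both spaces live in the same tree, and to keep it short each space stores only a translation (a list of 3 numbers) relative to its parent instead of a matrix4, so composing transforms is just addition. The names toy-space, offset-to-root and naive-get-transform are all made up.

```lisp
;; Hypothetical sketch: translations stand in for matrix4 transforms.
(defstruct (toy-space (:constructor make-toy-space (name offset &optional parent)))
  name
  offset   ; where this space's origin sits, in the parent's coordinates
  parent)  ; NIL for the root

(defun offset-to-root (space)
  "Sum every offset on the parent chain. Redone from scratch on each call."
  (if (null space)
      (list 0 0 0)
      (mapcar #'+
              (toy-space-offset space)
              (offset-to-root (toy-space-parent space)))))

(defun naive-get-transform (from to)
  "Translation taking coordinates in FROM to coordinates in TO.
Walks both chains all the way to the root every time, caching nothing."
  (mapcar #'- (offset-to-root from) (offset-to-root to)))

(defparameter *world* (make-toy-space 'world '(0 0 0)))
(defparameter *table* (make-toy-space 'table '(10 0 0) *world*))
(defparameter *cup*   (make-toy-space 'cup   '(1 2 0)  *table*))

(naive-get-transform *cup* *world*) ; => (11 2 0)
(naive-get-transform *world* *cup*) ; => (-11 -2 0)
(naive-get-transform *cup* *table*) ; => (1 2 0)
```

The waste is easy to see: asking for cup to table still walks all the way up to world twice. Caching, or stopping at the common ancestor, are the obvious places to optimize later.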

This leaves the question of what good code looks like when using this feature. I have a few things in my mind already that need answering:

- What happens if you try to pass an ephemeral type like position or space from one shader stage to another?

- Should stages have an implicit space? (I think so)

- Can ephemeral types be used in gpu-structs? (I think this should be made to work)

- If you pass up a position as a uniform, where does its space come from?

- If you made a gpu-struct slot a position, should the space come from the stage?

- If there are implicit spaces in shaders then we need implicit uniform upload. Do we just use the implicit-uniforms feature for this? How does that interact with the compiler’s space-transform pass?

This should keep me busy :)

I dun a artikale

I work on Fuse as my day job (which is awesome) and have to deal a lot with interop with java/objc (which are not :D).

In the course of making, remaking, refining and smashing-my-head-against bindings I came to some conclusions about bindings. This article is about the work Olle (another awesome Fuse employee) and I did and what the first result looks like.

Pro-crastinating

- Found some bug

- looked at behind the scenes code

- it looked complex

- procrastinated by writing doc strings for bunch of functions

- now understand the process, know where to start fixing the issue.

- regret doing this less often

Booya! Space checking lives!

Oh progress feels so good!

Ok, now given some code like this:

    (defun-g vert ((vert pos-col) &uniform (s space-g) (w space-g))
      (in s
        (in w
          (p! (v! 1 1 1 1)))
        0)
      (values (v! (pos vert) 1.0)
              (col vert)))

The in portion does nothing useful; it exists purely so that there is a position (the p!) which is created inside the scope of the space w. That position is returned into the scope of space s. To ensure this is valid the compiler needs to multiply the position by some kind of w space to s space matrix.

Let’s look at the compile output (it’s been cleaned up for readability)

    #version 330

    layout(location = 0) in vec3 fk_vert_position;
    layout(location = 1) in vec4 fk_vert_color;

    out vec4 OUT_34V;

    uniform mat4 W-TO-S;

    void main() {
        (W-TO-S * vec4(1,1,1,1));
        0;
        gl_Position = vec4(fk_vert_position,1.0f);
        OUT_34V = fk_vert_color;
    }

Here we can see that there is no mention of w, s, positions or spaces. The compiler has created a uniform to hold the w space to s space transform and multiplied it with the vec4.

On the cpu side we can find this code

    ...
    (let ((w-to-s (spaces:get-transform w s)))
      (when w-to-s
        (let ((cepl-gl::val w-to-s))
          (when (>= w-to-s-uniform-location 0)
            (cepl-gl::uniform-matrix-4ft w-to-s-uniform-location cepl-gl::val)))))
    ...

Here we see that the original spaces w & s are passed to the get-transform function which returns the matrix4 which is uploaded.

As you would imagine there are still plenty of little bugs to clean up, but those feel like nothing compared to how much work this has taken. I’m pretty stoked right now.

I’m looking forward to posting a proper example of how you would use this in regular shaders soon.

That’s enough for this week

Ciao!

Spaces still in progress

Bloody hell that month went fast. Well, the obvious news is that the spaces feature is not done. I lost a week and a half to business, but it was in Palo Alto so I got to visit the Computer History Museum, which was badass.

I’m also stoked at how much I did get done in that time. Varjo progress is marching onwards and the parts of cepl I found hairy are slowly becoming less so.

The big changes are happening in varjo purely because varjo is outgrowing the simple model that has served it up to now. Right now I’m making sure that a varjo compile pass not only gives you the result (and metadata) but also includes the ast. Varjo is currently single pass (and that was fine) but cepl wants to be able to use varjo in a multipass fashion. It needs the ast so it can create the varjo code for the second pass (where, in this case, spaces are turned into matrices).

It is likely that, in time, varjo will just become properly multipass. But I’m going to do this in steps as I don’t want to be working on features for features’ sake; I want the space feature to drive this.

More news as it comes

Flow analyzer complete enough

So this week I got the flow analyzer working, WOO! There are a few cases it’s missing but the hard stuff (iteration) is done and there’s enough that I can start looking at how to use it.

So a refresh:

- On the cpu side I have a tree of transforms representing different spaces (like a lightweight scenegraph)

- I want to use these in a sane way on the gpu

- I can’t afford any runtime logic because then we are pissing performance up a wall.

- Matrices describe transforms between spaces. We are interested in the spaces yet we have no explicit way of handling them.

- If we could use spaces in our shaders and at compile time turn them into matrices (that are then uploaded as uniforms) then things would be much clearer.

- To be able to resolve this at compile time we need a flow analyzer…which we now have, yay!

So the next question was how to use this.

position is a point (vector) with a space. Position is a compile time construct which will be compiled to a regular vector

space will be the name of the type. Spaces will be passed up as uniforms

    (defun-g test ((vert :vec4) &uniform (world space))
      ...)

I was imagining a transform function that let you transform a position from one space to another:

(transform pos world-space clip-space)

This is kinda dumb though as the position knows what space it is in. So maybe

(transform pos clip-space)

Hmm, might be ok. What would be sweet though is a scope where everything inside is guaranteed to be in a certain space

    (in world-space
      (some code)
      (more code))

wait though, this makes transform redundant as

(in clip-space pos)

Would transform the pos to clip-space, and it would return as it’s the last thing in the scope. Sweet!

in is also nicer to write than transform so I think we have a winner.

Note on making positions: in cepl/varjo vectors are made with the v! function. As in (v! 0 1 2) returns a vector3. Position will have a similar interface but it will expect a space, either as an argument or taken from the current in scope. So (p! 0 1 2 world-space) gives you a position3 in world space. (in world-space (p! 0 1 2)) does the same. (I may kill the first version so in is the only way to do it.)

So assuming this worked how does cepl use it?

My current thinking is that cepl will iterate over the function calls looking for calls involving spaces and positions. It then works out the matrices needed for each call, de-duplicates the result and adds them as uniforms. Intermediate calculations need to be added to the source code (I haven’t worked out the details for that yet) and then the new lisp shader code (now without any spaces or positions) is recompiled. The result of this second pass is the shader uploaded to the gpu.
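Here is a toy version of the "find the matrices needed and de-duplicate them" step, just to show the shape of the idea. It is a sketch with big assumptions: it works on plain quoted forms rather than varjo’s compiled output, and it records a transform for every in nested inside a different in, where the real pass would only care when a position actually crosses the boundary. The names collect-space-pairs and uniform-name are invented.

```lisp
;; Hypothetical sketch: walk shader source, collect the inner->outer space
;; pairs that would each need a matrix uniform, keeping each pair only once.
(defun collect-space-pairs (form)
  "Return a list of (INNER . OUTER) pairs, one per distinct nesting of IN forms."
  (let ((pairs '()))
    (labels ((walk (form outer)
               (when (consp form)
                 (if (eq (first form) 'in)
                     (let ((inner (second form)))
                       ;; Leaving INNER for a different OUTER needs a transform.
                       (when (and outer (not (eq inner outer)))
                         (pushnew (cons inner outer) pairs :test #'equal))
                       (dolist (sub (cddr form))
                         (walk sub inner)))
                     (dolist (sub (rest form))
                       (walk sub outer))))))
      (walk form nil))
    (nreverse pairs)))

(defun uniform-name (pair)
  "Build a symbol such as W-TO-S to name the uniform for PAIR."
  (intern (format nil "~a-TO-~a" (car pair) (cdr pair))))

;; Two separate w->s crossings still give a single uniform.
(collect-space-pairs
 '(progn
    (in s (in w (p! (v! 1 1 1 1))) 0)
    (in s (in w (p! (v! 0 0 0 1))))))
;; => ((W . S))
```

The interesting part (rewriting the code so that no spaces or positions remain before the second compile) is exactly the bit I said I haven’t worked out, so it isn’t sketched here.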

I’m off to america this week so I have no idea if I will get any of this done. We shall see :)

The flow analyzer LIVES (a bit)

Had a couple of days off coding but now I’m back. Yet again I found limits in my compiler’s architecture, this time in how I handled environments.

So before what I had was roughly as follows:

I have a tree of code that needs compiling. The compiler walks down the tree and compiles each form. The environment is simply an object that holds all the variables and functions that exist at that point in the program. To implement variable scope I simply copy the environment as it was at the start of the scope, add the new variable/s and then throw the copy away at the end. Because the stuff outside the scope only sees the original environment it can’t access variables from the inner scope.

This has worked great for ages but now my flow analyzer wants the answer to the question “what scope was this variable made in?” and that data has been thrown away.

So now I’ve switched to a tree of environments, and they are now all immutable. This was a damn big change so I took the opportunity to start using a testing framework so that I could build basic tests while getting everything working again. This went really well so now I can show the following.
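As a rough illustration of why the tree helps, here is a minimal sketch of immutable environments linked to their parents. This is not varjo’s code; env, extend-env and find-binding-env are names I’m making up, and each node holds a plain alist of bindings.

```lisp
;; Hypothetical sketch of a tree of immutable environments.
(defstruct (env (:constructor %make-env (parent bindings)))
  (parent nil :read-only t)
  (bindings nil :read-only t)) ; alist of (name . value)

(defun extend-env (parent name value)
  "Return a NEW child environment binding NAME. PARENT is left untouched."
  (%make-env parent (list (cons name value))))

(defun find-binding-env (env name)
  "Answer 'what scope was this variable made in?' by walking up the parents."
  (cond ((null env) nil)
        ((assoc name (env-bindings env)) env)
        (t (find-binding-env (env-parent env) name))))

;; Mirrors (let ((x 1)) ... (let ((z 3)) ...)).
(defparameter *root-env* (%make-env nil nil))
(defparameter *x-scope*  (extend-env *root-env* 'x 1))
(defparameter *z-scope*  (extend-env *x-scope* 'z 3))

(eq (find-binding-env *z-scope* 'x) *x-scope*) ; => T
(find-binding-env *x-scope* 'z)                ; => NIL
```

Nothing is thrown away when a scope ends: the inner node still exists and still points at its parent, so the question the flow analyzer asks always has an answer. Code in the outer scope simply never holds a reference to the inner node.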

Let’s take this code. It’s useless but you can see that we make two vectors (that’s the v! forms) each starting with x

    (defshader test ()
      (let ((x 1))
        (v! x 0)
        (progn
          (let ((z 3))
            x))
        (v! x 0 0 0)))

Now, if I let the flow analyzer do its work and inspect the data for the v! calls, I get something like:

(V! 514 515)

(V! 514 518 519 520)

The numbers are called flow-ids, they are used to track the flow of a value through a program. If two are the same then they are the same value (even if they get bound to different variables or passed through functions).

So above we can see that 514 appears as the id of the first arg for both v! forms, that means it’s not just the same variable (x) but the same value too.

Now in the following example we set x to be a different value (it’s in a progn and a let so I could see if it would track the flow of execution properly).

    (defshader test ()
      (let ((x 1))
        (v! x 0)
        (progn
          (let ((z 3))
            (setq x z)))
        (v! x 0 0 0)))

The flow analysis of the v! calls in this program looks like this:

(V! 506 507)

(V! 509 510 511 522)

This time the flow-ids of the first argument are different. This shows that, even though they both take x, the system knows the value has been changed.
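If you want to poke at the idea, here is a tiny stand-alone flow-id tracker that reproduces the behaviour of the two examples. It is a toy and not varjo’s analyzer: it only understands let, progn, setq and v!, it works on quoted forms, and the ids it hands out are small counters, so only whether two ids match is meaningful. All the function names are invented.

```lisp
;; Hypothetical toy flow analysis: every new value gets a fresh id, and
;; variables just carry the id of whatever value they currently hold.
(defvar *next-flow-id* 0)
(defvar *v!-calls* '())

(defun fresh-flow-id ()
  (incf *next-flow-id*))

(defun flow-body (forms env)
  "Analyse FORMS in order and return the flow-id of the last one."
  (let ((last-id nil))
    (dolist (form forms last-id)
      (setf last-id (flow-id-of form env)))))

(defun flow-id-of (form env)
  "Return the flow-id of FORM's value. ENV is an alist of (variable . flow-id)."
  (cond ((symbolp form) (cdr (assoc form env))) ; a variable: reuse its id
        ((atom form) (fresh-flow-id))            ; a literal: brand new value
        (t
         (case (first form)
           (let (let ((inner env))
                  (dolist (binding (second form))
                    (push (cons (first binding)
                                (flow-id-of (second binding) env))
                          inner))
                  (flow-body (cddr form) inner)))
           (progn (flow-body (rest form) env))
           ;; Assignment: the variable now carries the id of the new value.
           (setq (setf (cdr (assoc (second form) env))
                       (flow-id-of (third form) env)))
           (v! (let ((ids (mapcar (lambda (arg) (flow-id-of arg env))
                                  (rest form))))
                 (push (cons 'v! ids) *v!-calls*)
                 (fresh-flow-id)))
           (otherwise (error "Unhandled form: ~s" form))))))

(defun v!-flow-ids (form)
  "Return each v! call in FORM with its arguments replaced by flow-ids."
  (let ((*next-flow-id* 0)
        (*v!-calls* '()))
    (flow-id-of form '())
    (reverse *v!-calls*)))

(v!-flow-ids '(let ((x 1)) (v! x 0) (progn (let ((z 3)) x)) (v! x 0 0 0)))
;; => ((V! 1 2) (V! 1 5 6 7))
(v!-flow-ids '(let ((x 1)) (v! x 0) (progn (let ((z 3)) (setq x z))) (v! x 0 0 0)))
;; => ((V! 1 2) (V! 4 5 6 7))
```

In the first result both calls start with the same id. In the second, the setq gave x the id of z’s value, so the first arguments no longer match.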

With these fixes I can finally handle conditions as the trees of environments are trivial to diff, so I will be able to combine the flow-ids from the two execution paths.
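I haven’t built this yet, so take the following as a guess at what "combine" could mean rather than how it will end up. The assumption is that after a conditional each variable may hold the value from either path, so its flow-ids become the union of both. Environments here are plain alists of (variable . list-of-flow-ids), both branches are assumed to bind the same variables, and merge-branch-envs is a made-up name.

```lisp
;; Hypothetical sketch: merge the per-variable flow-ids of two branches.
(defun merge-branch-envs (then-env else-env)
  "Return an alist where each variable maps to the sorted union of the
flow-ids it could hold after either branch."
  (mapcar (lambda (then-entry)
            (let ((else-entry (assoc (car then-entry) else-env)))
              (cons (car then-entry)
                    ;; UNION gives no ordering guarantee, so sort a fresh copy.
                    (sort (copy-list (union (cdr then-entry) (cdr else-entry)))
                          #'<))))
          then-env))

;; x was reassigned in the 'then' branch only; y was untouched in both.
(merge-branch-envs '((x . (4)) (y . (2)))
                   '((x . (1)) (y . (2))))
;; => ((X 1 4) (Y 2))
```

A variable that ends up with more than one id is one whose value depends on which path ran.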

Thanks for reading!